I came across a very interesting ethical thought experiment the other day. It’s called the “Trolley Problem”. It goes like this.

You’re standing by a railway line and you spot a runaway train (or trolley, but that sounds too much like you’re in the supermarket and much less dangerous!). You look down the tracks and can see five workmen standing on the rails who obviously haven’t seen the train. They are too far away to hear you and aren’t looking in your direction, so you can’t signal to them. You then notice you are standing beside a lever that controls a set of points. If you pull the lever, the train will be diverted down a side line. But on the side line you can see a single workman who also hasn’t seen the train and is too far away to warn.

What do you do?

If you do nothing the five workmen die. If you pull the lever the single workman dies but the other five survive.

An Ethical Dilemma

So what did you choose?

There’s no right or wrong answer; it all depends on your personal ethics. Pulling the lever results in one man dying and five surviving. From a purely numerical point of view, this makes perfect sense.

But the act of pulling the lever means that you have intervened in the situation. You are now responsible for killing an innocent man to save the lives of others who would have died if you weren’t there.

Most people choose to pull the lever to reduce the death toll.

Upping the Ethical Stakes

But now let’s consider a slightly different situation.

It’s the same idea, with the runaway train and the five workmen facing certain death. This time, however, there is no lever. It just so happens that you’re standing beside a very large gentleman. You quickly do the calculations in your head and work out, with absolute certainty, that if you push him in front of the train, his body will bring it to a stop before it hits the workmen.

So what do you do in this situation?

From a numbers point of view, the outcome is exactly the same: either the five men die or you sacrifice one man to save them.

But now you’ll find that most people won’t push the gentleman in front of the train. Even though you’re still killing one to save five, it’s all got a bit more personal. You’re not just pulling a lever and letting the mechanics take over; you are physically grabbing hold of the man and pushing him to his death. Emotionally (not sure if that’s quite the right word!) this is much harder to do.

The BBC made a really good video showing this problem.

So What’s This Got to Do with Artificial Intelligence?

You might think that artificial intelligence making life-or-death decisions for us is decades away. But it’s actually just round the corner, literally. Most major car manufacturers expect to have fully autonomous, self-driving cars on sale around 2020, and some taxi firms are hoping to have self-driving taxis even sooner.

The trolley problem got me thinking about how these cars will behave when an accident becomes unavoidable. Perhaps the brakes fail, the steering locks up, or someone simply jumps out in front of the car. Whatever the situation, it isn’t hard to imagine that at some point the car’s artificial intelligence brain will have to weigh the safety of the people inside the car against that of the people it might hit.

So what will the car choose?

Should the car always prioritise its owner’s safety even if that means ploughing into a crowd of pedestrians?

Should the car try to calculate the probable death toll of each option and base its decisions on that?

Should the car prioritise the safety of children over the elderly?

Should certain people or professions be deemed more valuable? The Prime Minister, the President, the Queen? A heart surgeon, a judge, a website designer, an investment banker (only joking, they’d be at the bottom of the list)?

Should your boss get precedence over you?

Can you put a value on lives so that they can be compared?

Software Upgrades

What about software options? Should Mercedes or BMW be able to offer the ultimate executive upgrade to preserve your life whatever the cost?

Should all cars be programmed to be equally selfish, so that you can’t simply buy a ‘safer’ car?

Will Ferraris be programmed to take more risks to go faster and create an exciting driving experience at the expense of safety?

Should we have an ethical safety knob so we can choose for ourselves? 0: save the most lives and trees. 10: **** everyone else, I want to live!
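Just to show how blunt such a knob would be, here’s a purely hypothetical sketch in Python. Nothing here comes from any real car manufacturer: the `Option` class, the `choose_manoeuvre` function, the casualty estimates and the way the knob maps to a weighting are all my own made-up assumptions. Each possible manoeuvre comes with an estimated death toll for the people in the car and for everyone else. At 0 every life counts equally; at 10 only the occupants count.

```python
from dataclasses import dataclass


@dataclass
class Option:
    """A possible manoeuvre with made-up (hypothetical) expected casualties."""
    name: str
    expected_occupant_deaths: float
    expected_other_deaths: float


def choose_manoeuvre(options: list[Option], knob: int) -> Option:
    """Hypothetical 'ethical safety knob': pick the lowest weighted death toll.

    Occupants always have weight 1. Everyone else has weight (10 - knob) / 10,
    so knob 0 treats all lives equally and knob 10 ignores everyone outside the car.
    """
    if not 0 <= knob <= 10:
        raise ValueError("knob must be between 0 and 10")
    others_weight = (10 - knob) / 10

    def weighted_toll(option: Option) -> float:
        return (option.expected_occupant_deaths
                + others_weight * option.expected_other_deaths)

    # min() returns the first option with the lowest weighted toll
    return min(options, key=weighted_toll)


# A made-up dilemma: swerve and kill the occupant, or stay in lane and hit five people
options = [
    Option("swerve into barrier", expected_occupant_deaths=1.0, expected_other_deaths=0.0),
    Option("stay in lane", expected_occupant_deaths=0.0, expected_other_deaths=5.0),
]

for knob in (0, 5, 10):
    print(knob, choose_manoeuvre(options, knob).name)
# 0 swerve into barrier
# 5 swerve into barrier
# 10 stay in lane
```

Notice how a single number quietly decides who lives: turn the dial far enough towards 10 and the same car that would have sacrificed its owner ploughs into the crowd instead.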

Who’s Making These Decisions?

At the moment, I guess it’s the software engineers who program the AI brains. And I suspect that during development the cars are set to sacrifice themselves rather than risk a media nightmare when a self-driving car kills a child.

But when the cars are released into the wild who then makes the rules?

Can we really just leave it to the programmers?

Will programmers need to take out high levels of professional insurance in the same way brain surgeons, cardiac surgeons and others who make life and death decisions have to?

Will there be legislation to enforce AI ethics?

It’s going to be interesting to find out.

What Next?

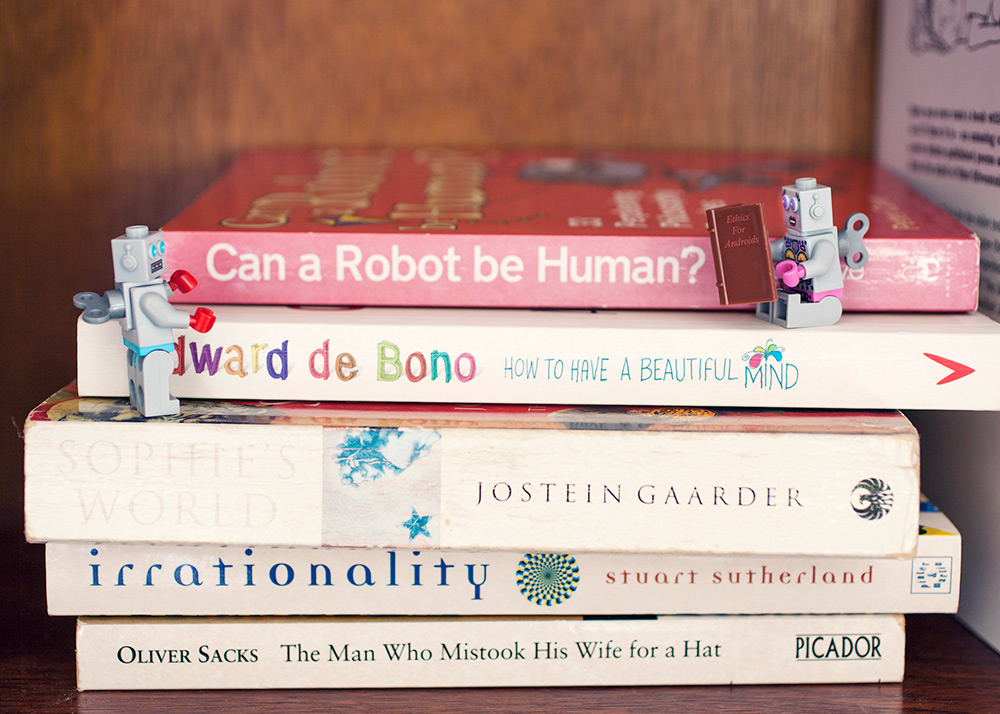

AI fascinates me. I think we’re living in very exciting times as this technology really takes off. Make sure you follow me or sign up for my newsletter for access to my latest posts.

And remember, not all AI is out to kill us!